To get an overview of the main approaches to image classification, I trained models on the CIFAR-10 data set using several different techniques. This post shares what I learned from the experiment.

1) FEATURE EXTRACTION + MACHINE LEARNING CLASSIFIER:

Before the rise of deep learning over the last 10 years, this was basically how people classified images. They first used a feature extractor to convert each H x W x C image into a modestly sized feature vector that tries to capture all the information needed to solve the classification problem. This feature vector was then fed to a machine learning classifier such as an SVM with a nonlinear kernel.

For this method I used a concatenation of Histogram of Oriented Gradients (HOG) and colour histogram features. HOG captures the texture information of an image and ignores colour, while a colour histogram captures colour information and ignores texture, so concatenating the two gave me a good enough feature vector to work with. On these feature vectors I tried classifiers such as Random Forests, shallow ANNs, and SVMs with linear and RBF kernels. Of these, the SVM with an RBF kernel gave the best accuracy, a modest 58 percent under 10-fold cross-validation.
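To make the idea of concatenating a gradient descriptor with a colour descriptor concrete, here is a minimal sketch in plain Python. It is not the code used for the experiment: the orientation histogram below is a deliberately simplified stand-in for HOG (one histogram over the whole image, with no cells or block normalisation), and the function names, bin counts and the nested-list image format are all assumptions made for illustration.

```python
import math


def colour_histogram(image, bins=8):
    """Per-channel histogram of 0-255 values; each channel's bins sum to 1.

    `image` is assumed to be a nested list of shape H x W x C.
    """
    n_channels = len(image[0][0])
    n_pixels = len(image) * len(image[0])
    hist = [0.0] * (bins * n_channels)
    for row in image:
        for pixel in row:
            for c, value in enumerate(pixel):
                b = min(value * bins // 256, bins - 1)
                hist[c * bins + b] += 1.0 / n_pixels
    return hist


def orientation_histogram(image, bins=9):
    """Simplified HOG-like descriptor (an assumption, not full HOG).

    Builds one magnitude-weighted histogram of unsigned gradient
    orientations (0-180 degrees) over the greyscale image.
    """
    h, w = len(image), len(image[0])
    grey = [[sum(p) / len(p) for p in row] for row in image]
    hist = [0.0] * bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = grey[y][x + 1] - grey[y][x - 1]  # horizontal central difference
            gy = grey[y + 1][x] - grey[y - 1][x]  # vertical central difference
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle / (180.0 / bins)), bins - 1)
            hist[b] += math.hypot(gx, gy)
    total = sum(hist)
    return [v / total for v in hist] if total > 0 else hist


def extract_features(image):
    """Concatenate the texture-like and colour descriptors into one vector."""
    return orientation_histogram(image) + colour_histogram(image)


# Toy 8x8 RGB image: black left half, white right half (one vertical edge).
toy_image = [[(0, 0, 0)] * 4 + [(255, 255, 255)] * 4 for _ in range(8)]
features = extract_features(toy_image)
print(len(features))  # 9 orientation bins + 3 channels * 8 colour bins
```

In practice a library implementation would normally replace both functions, for example `skimage.feature.hog` for the gradient part, and the resulting vectors could be passed to something like scikit-learn's `SVC(kernel="rbf")`.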

2) A SHALLOW CNN TRAINED FROM SCRATCH:

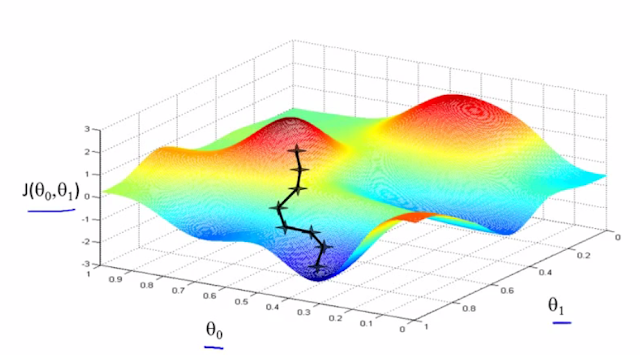

The next thing I tried, and was particularly excited about, was training my own CNN. Because of limited hardware resources, I could only train a fairly shallow model with the architecture conv-relu-pool -> conv-relu-pool -> conv-relu-pool -> FC -> FC -> softmax. I added dropout between layers to reduce overfitting, and the loss function was cross-entropy, the natural choice with a softmax output. I experimented with different learning rates (it is advisable to decrease them by factors of 10, as in 0.1, 0.01, 0.001, 0.0001) and optimizers (stochastic gradient descent with and without momentum, Adam, AdaGrad, RMSprop, etc.). The best I could do was close to 70 percent validation and test accuracy. This is quite an increase in accuracy over the earlier approach, but the computation was far more expensive. To put this in context, training with the first approach took less than half an hour, while the conv net took a whole night to train on my PC.
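To see what this architecture does to a 32 x 32 x 3 CIFAR-10 image, the sketch below traces the tensor shape through each stage. The post does not state kernel sizes, filter counts or the width of the first FC layer, so the 3x3 "same" convolutions, 2x2 pooling, filter counts (32, 64, 128) and 256 hidden units are assumptions chosen only for illustration.

```python
def conv_same(shape, filters):
    """Assumed 3x3 conv, stride 1, 'same' padding: H and W unchanged."""
    h, w, _ = shape
    return (h, w, filters)


def max_pool_2x2(shape):
    """2x2 max pooling with stride 2 halves H and W."""
    h, w, c = shape
    return (h // 2, w // 2, c)


def trace_shapes(input_shape=(32, 32, 3), filters=(32, 64, 128),
                 hidden_units=256, n_classes=10):
    """Return (stage name, output shape) pairs for the whole network."""
    shapes = [("input", input_shape)]
    shape = input_shape
    for i, f in enumerate(filters, start=1):
        shape = conv_same(shape, f)
        shapes.append((f"conv{i}+relu", shape))
        shape = max_pool_2x2(shape)
        shapes.append((f"pool{i}", shape))
    flat = shape[0] * shape[1] * shape[2]
    shapes.append(("flatten", (flat,)))
    shapes.append(("fc1", (hidden_units,)))
    shapes.append(("fc2+softmax", (n_classes,)))  # one probability per class
    return shapes


for name, shape in trace_shapes():
    print(f"{name:12s} {shape}")
```

With these assumed settings, three pooling stages shrink the spatial size from 32 to 4, so the first FC layer sees a flattened vector of 4 * 4 * 128 = 2048 values.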

To implement this I used Keras, a high-level wrapper around TensorFlow or Theano that is quite useful for fast prototyping of generic deep learning models.

3) TRANSFER LEARNING:

Transfer learning is basically piggybacking on models previously trained on other, more generic data sets and customizing them by fine-tuning for your own application. Quite a number of very deep models trained on multiple GPUs have their weights openly available online, such as AlexNet, VGGNet and GoogLeNet. These models were trained for the ImageNet challenge, on a much larger data set and for a much larger number of classes. We use transfer learning to adapt them to perform well on CIFAR-10 data. Transfer learning comes in two flavours. The first option is to use the trained model as a feature extractor for our image data and run a softmax regression or SVM on top of it. This gave an accuracy of 55 percent, which is less than what I got using HOG and colour histogram as feature extractors. The other, more commonly used approach is to use the previously trained model as an initialization and fine-tune layers of that network on our data. This gave me an accuracy of 80 percent, with the same computation time as the approach where I trained the CNN from scratch.
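The difference between the two flavours comes down to which layers are allowed to change during training. The toy sketch below models a network as a list of layers with a `trainable` flag. The `Layer` class, the layer names and the helper functions are all hypothetical and stand in for a real pretrained model; no weights are loaded or trained here.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    trainable: bool = True


def build_pretrained_base():
    """Hypothetical stand-in for a network loaded with ImageNet weights."""
    names = ["conv1", "conv2", "conv3", "conv4", "conv5", "fc6", "fc7"]
    return [Layer(n) for n in names]


def as_feature_extractor(base):
    """Flavour 1: freeze every pretrained layer, train only a new classifier."""
    frozen = [Layer(layer.name, trainable=False) for layer in base]
    return frozen + [Layer("new_softmax_10")]


def for_fine_tuning(base, n_unfrozen):
    """Flavour 2: keep pretrained weights as initialization and let the
    last `n_unfrozen` pretrained layers update along with the new classifier."""
    cut = len(base) - n_unfrozen
    layers = [Layer(layer.name, trainable=(i >= cut))
              for i, layer in enumerate(base)]
    return layers + [Layer("new_softmax_10")]


def trainable_names(model):
    return [layer.name for layer in model if layer.trainable]


base = build_pretrained_base()
print(trainable_names(as_feature_extractor(base)))
print(trainable_names(for_fine_tuning(base, n_unfrozen=2)))
```

In Keras the corresponding switch is each layer's `trainable` attribute. How many layers to unfreeze is a tuning choice that the post does not specify, so `n_unfrozen=2` above is only an example value.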